Segmentation

- Train network: PhagoPred.detectron_segmentation.train
- Segment dataset: PhagoPred.detectron_segmentation.segment
- Evaluate performance: PhagoPred.detectron_segmentation.kfold

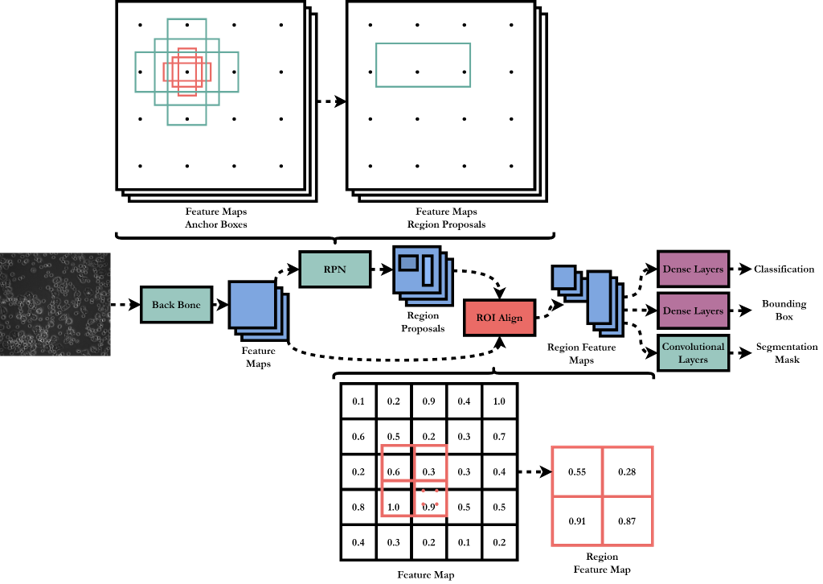

Mask R-CNN

Each image is segmented with a Mask R-CNN network, implemented using Detectron2 and pretrained on the COCO dataset (around 2.5 million labelled object instances). This pretraining lets the network reach reasonable segmentation performance after fine-tuning on only ~500 annotated macrophage instances.

Architecture Overview

- Backbone – A pretrained convolutional neural network classifier, truncated before its classification layers so that it outputs a feature map of the whole image instead of a class.

- Region Proposal Network – From the feature map, a set of rectangular region proposals is extracted, each with an associated probability of containing an object. This network is also convolutional, with a regressor branch that refines each proposal's box and a classifier branch that gives the object probability.

- ROI Align – The region proposals are used to extract sections of the feature map. ROI Align improves on the earlier ROI Pooling approach, which rounds proposal coordinates to the feature-map grid: it instead bilinearly interpolates the feature map, so the exact proposed region is extracted without misalignment.

- Output – The features extracted for each region are passed through fully connected (dense) layers to predict a class and a refined bounding box. In parallel, a convolutional branch outputs a segmentation mask for the region.
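To make the ROI Align step concrete, here is a minimal NumPy sketch of the idea, assuming a single 2D feature map and a box `(y1, x1, y2, x2)` already given in feature-map index coordinates. The function names are hypothetical, and this is not Detectron2's implementation, which works on batched tensors and differs in details such as a half-pixel coordinate offset. The key point is that the box is never rounded: each output bin averages a few regularly spaced samples, each obtained by bilinear interpolation.

```python
import numpy as np


def bilinear_sample(feature_map: np.ndarray, y: float, x: float) -> float:
    """Interpolate a 2D feature map at a fractional (y, x) location."""
    h, w = feature_map.shape
    # Clamp so samples near the border stay inside the map
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    # Weighted mix of the four surrounding grid values
    top = (1 - dx) * feature_map[y0, x0] + dx * feature_map[y0, x1]
    bottom = (1 - dx) * feature_map[y1, x0] + dx * feature_map[y1, x1]
    return float((1 - dy) * top + dy * bottom)


def roi_align(feature_map, box, output_size=(2, 2), samples_per_bin=2):
    """Pool a box (y1, x1, y2, x2) to a fixed grid without rounding its coordinates."""
    y1, x1, y2, x2 = box
    out_h, out_w = output_size
    bin_h = (y2 - y1) / out_h
    bin_w = (x2 - x1) / out_w
    out = np.zeros(output_size)
    for i in range(out_h):
        for j in range(out_w):
            total = 0.0
            for sy in range(samples_per_bin):
                for sx in range(samples_per_bin):
                    # Regularly spaced sample points inside bin (i, j)
                    y = y1 + (i + (sy + 0.5) / samples_per_bin) * bin_h
                    x = x1 + (j + (sx + 0.5) / samples_per_bin) * bin_w
                    total += bilinear_sample(feature_map, y, x)
            # Average the samples to get one value per bin
            out[i, j] = total / samples_per_bin**2
    return out
```

Because the box `(0.5, 0.5, 2.5, 2.5)` starts between grid cells, ROI Pooling would have to snap it to whole cells. Here it is sampled exactly where it lies.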

Performance

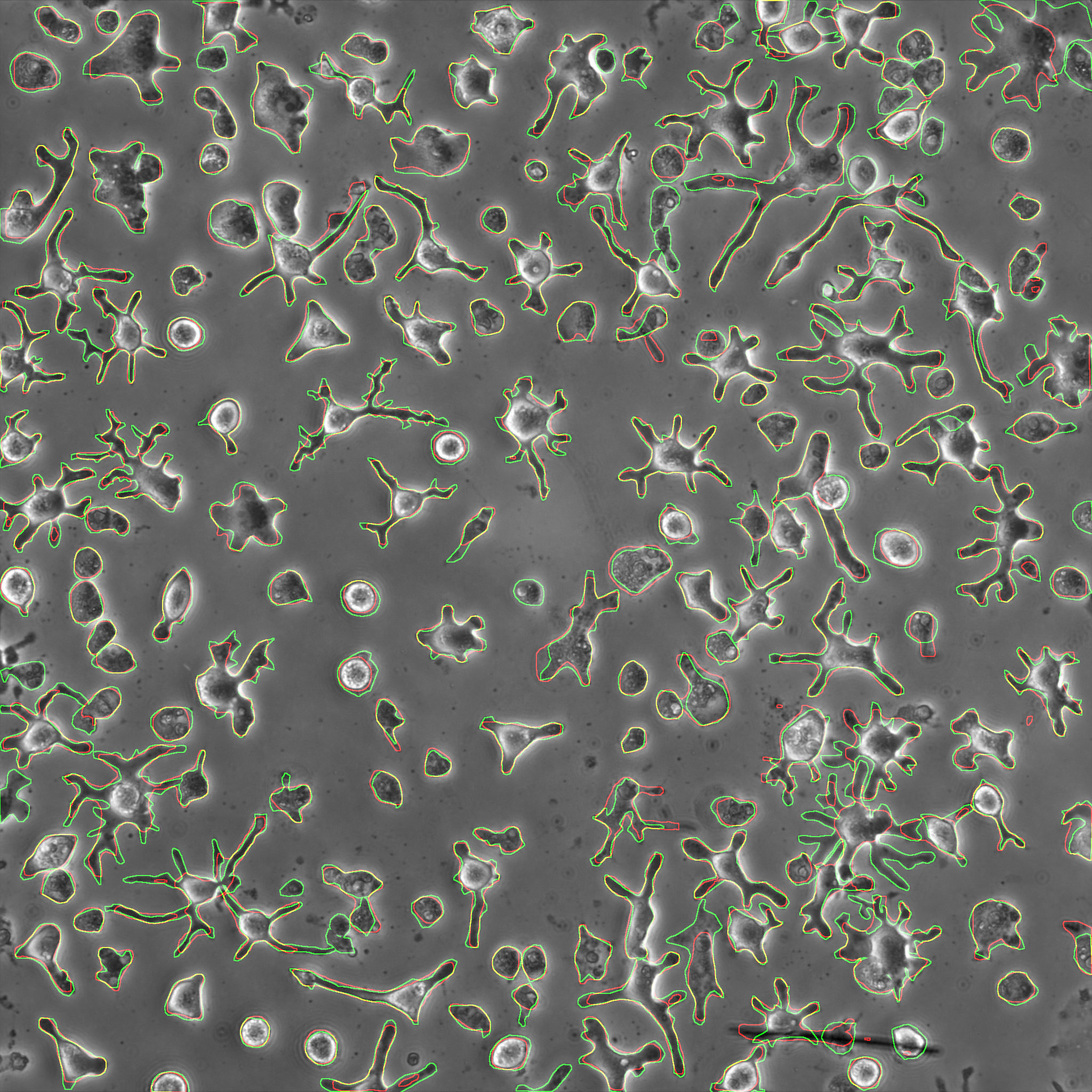

Ground Truth | Prediction | Overlap of ground truth and prediction

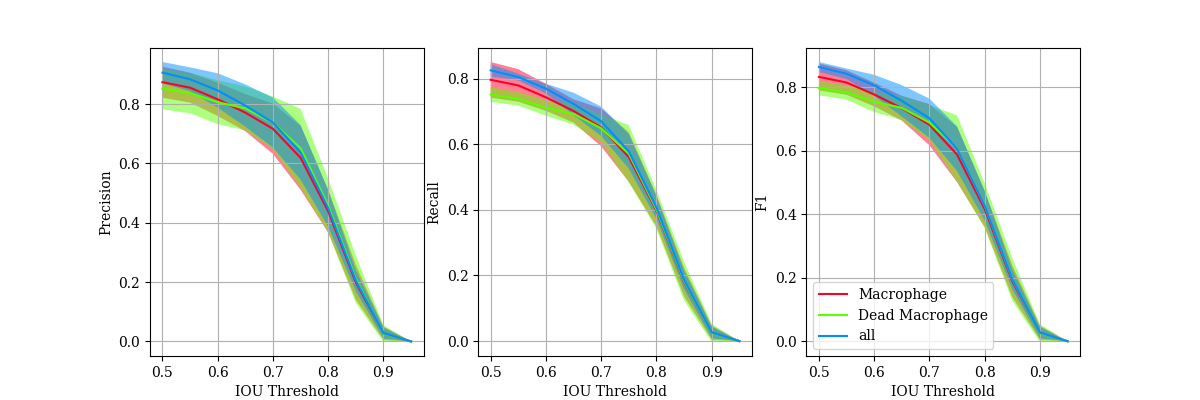

To evaluate performance quantitatively, precision, recall and F1-score are calculated:

\[Precision = \frac{Correct \: Predictions}{Total \: Predictions} = \frac{TP}{TP+FP}\]

\[Recall = \frac{Correct \: Predictions}{Total \: Ground \: Truth} = \frac{TP}{TP + FN}\]

\[F1 = \frac{2 \times Precision \times Recall}{Precision + Recall}\]

These require each instance to be classed as a true positive (TP), false positive (FP) or false negative (FN). Whether a predicted mask counts as a correct match for a ground-truth mask depends on how much the two must overlap, measured by the Intersection over Union (IoU). The metrics are therefore calculated over a range of IoU thresholds.

\[IoU = \frac{Area \: of \: Intersection}{Area \: of \: Union}\]
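The threshold-dependent counting can be sketched as follows, assuming masks are boolean NumPy arrays of the same shape. The text does not say how predictions are paired with ground-truth cells, so the greedy, highest-IoU-first one-to-one matching below is an assumption (Hungarian matching is another common choice). The function names `mask_iou`, `match_counts` and `precision_recall_f1` are hypothetical.

```python
import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """IoU of two boolean masks of the same shape."""
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(a, b).sum() / union)


def match_counts(gt_masks, pred_masks, threshold):
    """Greedy one-to-one matching at an IoU threshold; returns (TP, FP, FN)."""
    # Every (IoU, gt index, prediction index) pair, highest IoU first
    pairs = sorted(
        (
            (mask_iou(g, p), gi, pi)
            for gi, g in enumerate(gt_masks)
            for pi, p in enumerate(pred_masks)
        ),
        reverse=True,
    )
    matched_gt, matched_pred = set(), set()
    for iou, gi, pi in pairs:
        # Remaining pairs overlap too little (or not at all) to count as a match
        if iou < threshold or iou == 0.0:
            break
        if gi not in matched_gt and pi not in matched_pred:
            matched_gt.add(gi)
            matched_pred.add(pi)
    tp = len(matched_gt)
    # Unmatched predictions are FP, unmatched ground-truth cells are FN
    return tp, len(pred_masks) - tp, len(gt_masks) - tp


def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from the counts, guarding against division by zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Calling `match_counts` for each threshold in a range (for example 0.5 to 0.95) and passing the counts to `precision_recall_f1` gives one precision/recall/F1 point per threshold. A prediction that only partially covers a cell may be a TP at a low threshold and both an FP and an FN at a high one.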

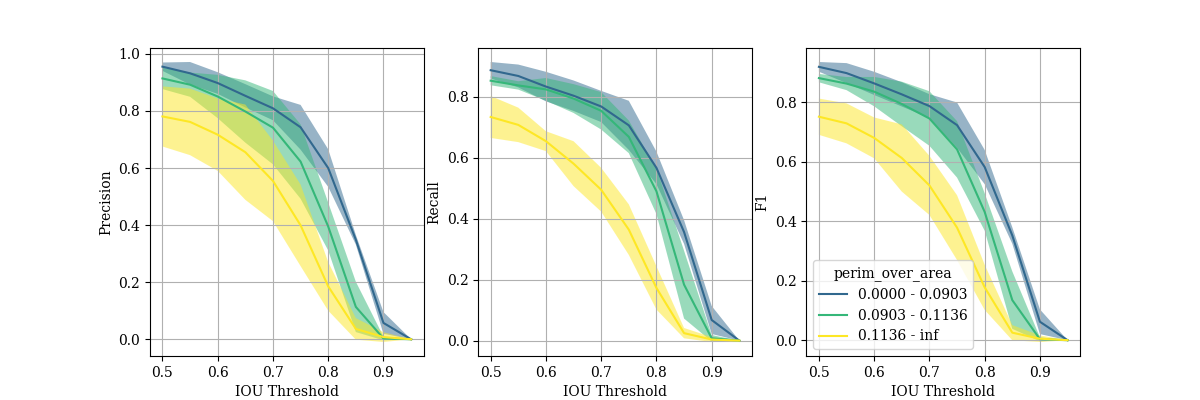

These metrics can also be calculated for subsets of the cells, which shows how individual cell features affect segmentation performance. Below are precision-recall curves for three subsets of cells with different ranges of perimeter over area. Cells with the highest perimeter over area (i.e. less circular shapes) performed significantly worse, suggesting that more training data containing cells of this kind should be included.
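The text does not state how perimeter was measured or how the three ranges were chosen. The hypothetical helpers below are therefore a sketch under two assumptions. Perimeter is estimated by counting exposed pixel edges, which overestimates diagonal boundaries; scikit-image's `regionprops` offers smoother perimeter estimates. Cells are then split into three roughly equal groups at the 33rd and 67th percentiles of perimeter over area.

```python
import numpy as np


def perimeter_over_area(mask: np.ndarray) -> float:
    """Crude perimeter/area for one boolean mask (perimeter = exposed pixel edges)."""
    # Pad with background so edges on the image border are counted too
    m = np.pad(mask.astype(np.int8), 1)
    # Each foreground/background transition along rows or columns is one exposed edge
    vertical = np.abs(np.diff(m, axis=0)).sum()
    horizontal = np.abs(np.diff(m, axis=1)).sum()
    area = mask.sum()
    return float((vertical + horizontal) / area) if area else float("nan")


def split_by_terciles(values):
    """Return index arrays (low, mid, high) split at the 33rd and 67th percentiles."""
    values = np.asarray(values, dtype=float)
    lo, hi = np.percentile(values, [100 / 3, 200 / 3])
    low = np.where(values <= lo)[0]
    mid = np.where((values > lo) & (values <= hi))[0]
    high = np.where(values > hi)[0]
    return low, mid, high
```

A compact 2×2 square scores 2.0 while a 1×8 line of pixels scores 2.25, so elongated or irregular cells land in the high group. Each group can then be evaluated separately with the same precision and recall calculation.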
